Introduction

The generative AI transformation has been rapid over the past 12 months, quickly evolving from parlour trick to commercial ‘force multiplier’. As companies grapple with the opportunities and risks, it is vital that boards understand their unique role in governing the adoption of these new technologies, how generative AI fits into existing risk management frameworks, the capabilities needed to optimise opportunities, and the questions they should ask of their executive teams. We have been here before; there is much we can learn from previous periods of innovation, such as the widespread adoption of computing, the rise of the internet and the shift to cloud computing. Now, as in these previous periods of transition, the board’s role is key and requires adjustment in terms of knowledge, composition, and ways of working. As regulators sharpen their focus on board governance of cyber-related issues, and generative AI’s development continues at speed, it is important that boards do not delay; now is the moment to take up the mantle on generative AI.

This Savanti board insight focuses on:

- The opportunities of generative AI,

- The risks of generative AI,

- The capabilities needed to optimise the opportunities of generative AI, and

- Questions that boards should ask of their executive teams.

Opportunities

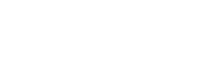

The opportunities of generative AI are enormous; they go beyond the obvious use cases of helping you to write an email (LLMs/language and chat) and generating images (AI creativity) to the range of examples shown in Diagram 1. Generative AI is improving technologies, such as virtual assistants, and democratising access by providing an expert interface for non-technical users. The impact of tools like ChatGPT on software engineers and knowledge workers has dominated discussions on AI during 2023, pushing generative AI to the ‘peak of inflated expectations’ of the hype cycle,[i] but there are many real opportunities to be found.

Diagram 1: Use cases for generative AI, FSP 2023

Uptake of generative AI is growing; in a recent McKinsey poll, 79% of respondents said they have had at least some exposure to generative AI, either for work or personal use, around one-fifth (22%) said they are regularly using it in their own work, and 40% said their organisations will increase their investment in AI as a result of advances in generative AI. The business functions most actively using these technologies are marketing and sales, product and service development, and service operations, such as customer care and back-office support.[ii]

There are early signs that adoption of generative AI adds financial value; it is estimated that the value added could be equivalent to as much as 9% of global industry revenue for tech companies, 5% for banking, 5% for pharmaceuticals and medical products, and 4% for education.[iii]

Boards have an important role to play on the opportunity side of generative AI; by asking targeted questions about how generative AI can redefine current business models or disrupt markets, they can push the executive team to think strategically. It is estimated that AI use is on the agenda for just over one-quarter (28%) of boards at present.[iv]

Opportunity-related questions that boards should ask of their executive teams:

- Current opportunities:

  - How is generative AI currently being used across the value chain?

- Unlocking opportunities:

  - Are there projects or initiatives that were previously side-lined due to resource constraints that are now viable as a result of generative AI?

- Future opportunities:

  - How could generative AI impact or disrupt our business model and market?

  - What new markets and services could be created or disrupted by these technologies?

  - What innovations using generative AI could take us into new products, services, or markets?

  - How can we safely test these opportunities?

Risks

Boards and executive teams must balance the opportunities of generative AI against the inevitable risks. Data suggests this is a work in progress for most boards; many board members say they lack the necessary understanding of generative AI,[v] and only one-fifth (21%) of survey respondents said their organisations had established policies governing employees’ use of generative AI.[vi] Boards need to decide how to incorporate AI into their risk register, who will own cross-functional AI-related risks, and how to ensure those risks are quantifiable and trackable. Risk and cyber risk management frameworks are actively being developed to address AI risks, for example the NIST AI Risk Management Framework,[vii] but AI risks should also be integrated within the organisation’s existing risk management processes, with frequent review to keep pace with rapid developments in this area.

The most common risks associated with generative AI are:

- Environmental: training and running generative AI models takes significant energy, so organisations need to consider how to mitigate the resulting energy use and carbon footprint.

- IT and technology: the costs associated with developing and running generative AI tools can quickly spiral. As a developing technology, the availability of solutions may change quickly.

- Cyber security: as generative AI is all about consuming and creating data, cyber security governance and hygiene are foundational. Over half (53%) of organisations acknowledge cyber security risks stemming from generative AI, but only 38% are working to mitigate those risks.[viii]

- Governance and resilience: regulatory requirements and political positioning on generative AI are shifting, and organisations also need to be prepared for the unintended consequences of the use of generative AI.

- Privacy and surveillance: organisations that share data with generative AI tools in order to train the models often do so without fully understanding how their information will be used or where it will go. This includes data relating to people, such as staff and customers.

- Intellectual property: IP owners may inadvertently share protected IP with generative AI tools, and content creators may find their generative AI-produced products contain IP owned by other organisations, opening them up to liabilities.

- Inaccuracy of data: the accuracy of output depends on the accuracy of inputs. Only one-third (32%) of organisations are mitigating the risk of inaccuracy, even though it is the risk they consider most relevant to them.[ix]

- Fraud: generative AI lowers the barrier to creating fraudulent identities, documents, or transactions at scale; organisations must ensure that detective controls are fortified against this developing threat.

- Talent: there will be a premium on skilled talent; there is a risk of talent flight for companies slow to adopt generative AI, and a risk of skills erosion for those who become too dependent on these technologies.[x]

- Bias and discrimination: generative AI models based on imperfect historical data run the risk of exacerbating in-built bias, and as the models develop there is the risk that they nudge users into biased or discriminatory decisions.

Risk-related questions that boards should ask of their executive teams:

- How should we integrate generative AI into our existing risk management framework?

- How will we ensure cross-functional AI-related risks are quantifiable and trackable and clearly owned at leadership level?

- What specific risks do we currently face, and what mitigating factors do we have or need to develop to manage them within an acceptable risk threshold?
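For teams that want to make "quantifiable, trackable and clearly owned" concrete, the following is a minimal sketch of an AI risk register, not a prescribed method. It assumes a simple 1 to 5 likelihood and impact scale, a score calculated as likelihood multiplied by impact, and a board-set threshold of 10; the class name `AIRisk`, the helper functions, the example entries and all the numbers are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    name: str
    category: str      # e.g. one of the risk categories listed above
    owner: str         # accountable executive; empty string if unassigned
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by impact.
        return self.likelihood * self.impact


def above_threshold(register, threshold):
    """Return risks scoring above the threshold, highest score first."""
    flagged = [risk for risk in register if risk.score > threshold]
    return sorted(flagged, key=lambda risk: risk.score, reverse=True)


def unowned(register):
    """Return risks with no named owner at leadership level."""
    return [risk for risk in register if not risk.owner.strip()]


# Illustrative entries only; not drawn from any real organisation.
register = [
    AIRisk("Customer data shared with public AI tools",
           "Privacy and surveillance", "Chief Data Officer", 4, 4),
    AIRisk("Inaccurate model output used in client reports",
           "Inaccuracy of data", "", 3, 4),
    AIRisk("Model hosting costs exceed budget",
           "IT and technology", "Chief Technology Officer", 2, 3),
]

for risk in above_threshold(register, threshold=10):
    print(f"{risk.score:>2}  {risk.name} (owner: {risk.owner or 'UNASSIGNED'})")
```

A register along these lines lets the board see at a glance which risks sit above the agreed threshold and which still lack an owner; the scale and threshold would need to match whatever scoring the organisation's existing risk framework already uses.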

Capabilities

Realising the opportunities of generative AI depends on having the right capabilities in terms of talent, technology and organisational culture.

Capability-related questions that boards should ask of their executive teams:

- Where within the organisation are our relevant skills and capabilities located?

- How is the organisation developing those skills and capabilities?

- If we rely on partners or outsourced solutions for those capabilities, how do we safeguard key information assets, such as customer data and intellectual property?

- Do we have the technological infrastructure capable of hosting generative AI models? Should we buy them in or build them ourselves?

- What KPIs and metrics will we use to understand the progress and value derived from generative AI?

- What due diligence capacity do we have to help us make sense of the generative AI opportunities we are presented with?

- Will our organisational structure help or hinder our pursuit of generative AI-related opportunities?

Questions for boards to ask of themselves

As boards ready themselves for their vital governance role in relation to generative AI, they must also ask questions of themselves:

- Do we have appropriate knowledge and understanding within the board?

- Do we have the right members around the table?

- Are we incorporating generative AI opportunities into our own board working practices?

Conclusion

Generative AI has the potential to redefine technological and organisational landscapes. In such era-shaping moments, the role of boards is critical; they both push the executive to think strategically about the opportunities on offer, and ensure the company has a comprehensive understanding of, and framework for managing, the inevitable risks that result. This paper provides a framework for boards and a practical toolkit of questions to pose to their executive teams to set them up for success in their adoption of generative AI.

[i] What’s New in Artificial Intelligence From the 2023 Gartner Hype Cycle™, Gartner, 2023

[ii] The State of AI in 2023: Generative AI’s breakout year, McKinsey, 2023

[iii] The State of AI in 2023: Generative AI’s breakout year, McKinsey, 2023

[iv] The State of AI in 2023: Generative AI’s breakout year, McKinsey, 2023

[v] Four essential questions for boards to ask about generative AI, McKinsey, July 7, 2023

[vi] The State of AI in 2023: Generative AI’s breakout year, McKinsey, 2023

[vii] AI Risk Management Framework, NIST, 2023

[viii] The State of AI in 2023: Generative AI’s breakout year, McKinsey, 2023

[ix] The State of AI in 2023: Generative AI’s breakout year, McKinsey, 2023

[x] How will ChatGPT and Other Generative AI Impact Leadership? Russell Reynolds Associates, March 15, 2023

Savanti is a leading UK cyber security consultancy whose clients include FTSE 100 companies, medium-sized enterprises and public sector organisations. Savanti was recently acquired by FSP Consulting Services (FSP). Founded in 2012, FSP are a leading digital transformation specialist, combining real world experience in business strategy, change and adoption and digital solution delivery. As a long-standing Microsoft Solutions Partner, their portfolio of modern workplace, cloud, data, and cyber security offerings, alongside trusted managed services delivery, is driving change for high-profile clients in both the public and private sector. Their work is founded on the commitment to deliver positive impact for both organisations and their people. FSP are proud to be a multi award-winning workplace, most notably recognised by Best Companies™. More information can be found at FSP

Written by Tom Hebbron

For more information on Savanti’s board advisory service or AI, contact Tom Hebbron.